The evolution of football data

When Charles Darwin wrote about evolution, one of his best-known examples was the beaks of the Galapagos finches. They had evolved to suit different ways of finding food, some to root around for insects and some to break open nuts. The idea of evolution by natural adaptation was set in motion: that living things adapt to new and different tasks in their quest for survival.

Football data is exactly the same.

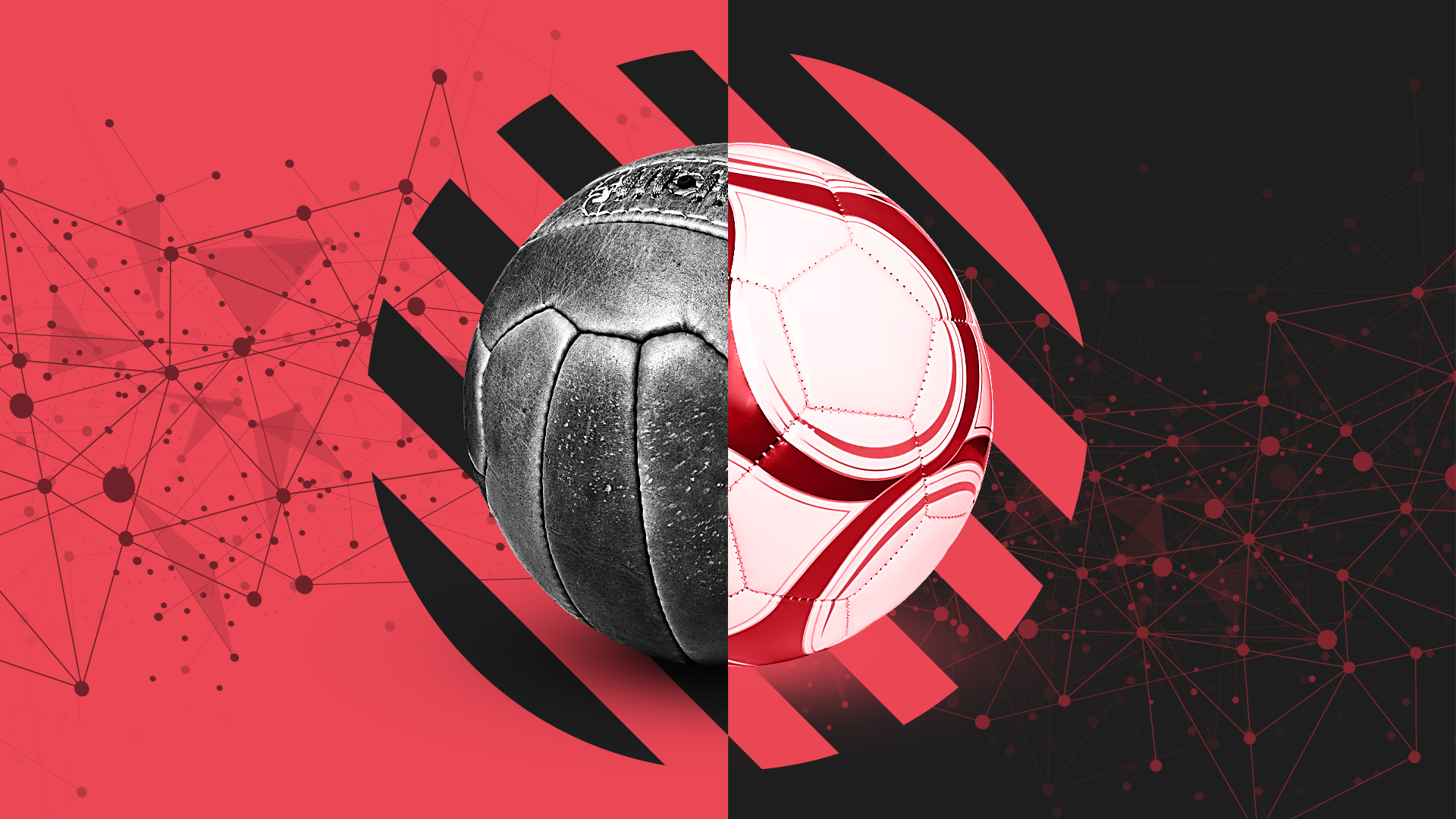

Collecting numerical information about football is now, in ‘data years’, many generations old. In its earliest days, in the mid-twentieth century, Charles Reep recorded the number of shots and the length of passing moves. In the late 1990s, tracking data began to be developed. In the 2010s, expected goals entered the mainstream. Each stage marked a moment in the evolution of football data.

The thing about evolution, though, is that it’s never-ending, and it doesn’t have a perfect ‘end goal’ either. Football data is still evolving right now, and in some very interesting directions.

In recent years there have been three main divisions in how data is collected. ‘Event data’ was collected by people watching games on television and noting ‘events’ like passes, tackles, and shots; ‘tracking data’ was collected with cameras and software that detect players’ locations multiple times per second; and GPS units collected ‘physical data’, with metrics like distance run and accelerations.

There was little overlap between the three, in two senses. First, companies usually collected only one of these three forms of data. Second, the different types could be difficult to work with together. However, as this blog post is being written, we’re in the middle of a new trend in which both of those things are changing.

Businesses sometimes merge, which is one way these different streams can be brought together. Meanwhile, stats on accelerations and high-speed running, traditionally associated with GPS data, are becoming a standard output for companies with camera-based tracking systems. ‘Event’ data is even more interesting: it too is changing, but from multiple directions.

On one side are the ‘event data’ collection companies, which are starting to collect things beyond the scope of ‘on-ball events’, or at least beyond what human collectors can record. They add these extra details using the same kinds of computer vision technology that camera-based tracking companies use to collect their data.

From the other direction, more and more ‘event’-type data is coming from tracking data companies. It takes significant time and expertise to determine, for example, the number of line-breaking passes from the mass of positional data that tracking produces. Instead of leaving that work to customers, the companies themselves process the information into ‘event’-type feeds.

These two types of data source, once fairly separate, are evolving closer together. Like the Galapagos finches, their beaks are adapting to the richest food source. They will keep adapting, too, optimising for new markets as time goes on. However, there are still niches in the present ecosystem waiting to be filled.

Data engineering (such as building the warehouse the data is stored in) is a necessity, but one that some decision-makers in the professional game still underappreciate. The bosses may be intrigued and excited by the prospect of their analysts having the latest tracking data, but if the databases and processes aren’t right, it’s like buying a Ferrari with no roads to drive it on.

Some data providers have their own software that people can use, which is one way around this. Less engineering is required and anyone who can use a computer can access the data, not just the data scientists who know how to code.

However, because collection companies compete with one another, some providers have little incentive to make their data work smoothly with other sources, particularly within their own software.

These are all problems that Twenty3 are working to address, through the data-agnostic approach of the Toolbox and the scalable Sports Data Platform that powers it. Being able to easily spin up a database and import different data sources would ease many of the problems data professionals in the game face.

The trend of data sources becoming more intertwined, and needing to exist side by side, looks set to continue. Recruitment departments have long needed contract information alongside on-pitch metrics, something that can be painful to set up and maintain. And that’s just one example; the amount of data collected on players seems set to keep increasing, too.

Back on the pitch, data collection will keep evolving as well. We may only have scratched the surface of hybridising different types of data. For example, let’s bring traditional physical metrics into it: could there be value in seeing take-on or passing success rates at different speeds? Or perhaps the number of sprints made specifically during a counter-pressing phase of play? Physical data has tended to stop at distance covered and number of accelerations, but it feels like an area ripe for further study and innovation.
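As a rough illustration of that kind of hybrid metric, here is a minimal Python sketch of passing success rate by speed. It assumes pass events have already been matched to the passer’s speed from tracking data; the `PassEvent` schema, the function names and the speed-band thresholds are all hypothetical, and real providers define their own fields, units and bands.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class PassEvent:
    """A pass from an event feed, enriched with the passer's speed from tracking data.

    Hypothetical schema for illustration only.
    """
    player: str
    speed_ms: float   # passer's speed in metres per second at the moment of the pass
    successful: bool


# Hypothetical speed bands in m/s: lower bound inclusive, upper bound exclusive.
SPEED_BANDS = [
    ("walking/jogging", 0.0, 4.0),
    ("running", 4.0, 5.5),
    ("high-speed", 5.5, 7.0),
    ("sprinting", 7.0, float("inf")),
]


def band_for(speed_ms: float) -> str:
    """Return the name of the speed band a given speed falls into."""
    for name, low, high in SPEED_BANDS:
        if low <= speed_ms < high:
            return name
    raise ValueError(f"speed out of range: {speed_ms}")


def pass_success_by_speed(passes):
    """Return {band: (completed, attempted, success_rate)} for bands with at least one pass."""
    attempted = defaultdict(int)
    completed = defaultdict(int)
    for p in passes:
        band = band_for(p.speed_ms)
        attempted[band] += 1
        if p.successful:
            completed[band] += 1
    return {
        band: (completed[band], attempted[band], completed[band] / attempted[band])
        for band, _, _ in SPEED_BANDS
        if attempted[band] > 0
    }


# Made-up toy data, purely to show the shape of the output.
passes = [
    PassEvent("A", 2.1, True),
    PassEvent("A", 3.0, False),
    PassEvent("B", 6.2, True),
    PassEvent("B", 7.5, False),
]
result = pass_success_by_speed(passes)
```

With the toy data above, `result` maps "walking/jogging" to `(1, 2, 0.5)`, "high-speed" to `(1, 1, 1.0)` and "sprinting" to `(0, 1, 0.0)`; bands with no passes are left out rather than reported as a misleading zero. The same grouping would work for take-ons, or for sprints tagged with a phase of play, once the two data sources share a common event key.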

The advantage that football has over the Galapagos finches is that humans can do things like create computer vision technology while birds can’t create new beaks. The only disadvantage? Articles about finches go out of date a lot less quickly than those about the lay of the land of football data.

We help our customers maximise the potential of football data. Whether you’re a data novice or expert, the Twenty3 Toolbox gives you the tools to do your job quicker and better.

If you think your organisation – whether in the media, broadcast, agency or pro club sector – could benefit from our product, you can request a demo here.